In my last post, I introduced the idea of MidiNet. But how should we design a machine learning model to realize this concept?

Disclaimer: This post only presents the idea and an overview. Since MidiNet is still in progress, please forgive me for leaving out some specific information. The technical details will be updated when the latest results of the MidiNet project are published.

Project Overview

The MidiNet project aims to mimic the way humans learn music, which is to progress through several stages:

Stage 1.

Just as a teacher would begin, we first give some easy examples and introduce the theory starting from its basic components.

Method:

In this stage, we start by designing a model that generates simple melodies using generative adversarial networks (GANs). The length of the generated output is fixed at one bar.

Teaser...

Unlike GANs for image tasks, music generation has no generally accepted feature representation. So our first goal is to design a compact feature representation that helps the GAN learn efficiently.

The drum clip above was generated by the GAN using one of my self-designed symbolic feature representations. The component (note), the velocity, and the length are all generated by the trained model.
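The actual MidiNet representation is not disclosed in this post, so the following is only a minimal sketch of what a compact, fixed-size symbolic encoding of one bar could look like. It assumes a 16-step grid and four General MIDI drum components, both hypothetical choices. Each cell stores a normalized velocity and a normalized length, so a generator whose outputs lie in [0, 1] could emit a whole bar at once. `Hit`, `encode_bar`, and `decode_bar` are illustrative names, not part of MidiNet.

```python
from dataclasses import dataclass

# Hypothetical grid: one 4/4 bar quantized to 16th notes.
STEPS_PER_BAR = 16
# Hypothetical drum components (General MIDI percussion note numbers):
# kick, snare, closed hi-hat, open hi-hat.
COMPONENTS = [36, 38, 42, 46]
MAX_VELOCITY = 127


@dataclass(frozen=True)
class Hit:
    step: int      # onset position within the bar, 0..15
    note: int      # MIDI note number of the drum component
    velocity: int  # MIDI velocity, 1..127
    length: int    # duration in grid steps, 1..16


def encode_bar(hits):
    """Encode one bar as a STEPS x COMPONENTS x 2 grid of values in [0, 1].

    Channel 0 holds the normalized velocity (0.0 means no onset);
    channel 1 holds the normalized length. A later hit on the same
    step and component overwrites an earlier one. Raises ValueError
    for a note that is not in COMPONENTS.
    """
    grid = [[[0.0, 0.0] for _ in COMPONENTS] for _ in range(STEPS_PER_BAR)]
    for h in hits:
        c = COMPONENTS.index(h.note)
        grid[h.step][c] = [h.velocity / MAX_VELOCITY, h.length / STEPS_PER_BAR]
    return grid


def decode_bar(grid, threshold=0.5 / MAX_VELOCITY):
    """Turn a grid (e.g. a generator's output) back into hits, ordered by step."""
    hits = []
    for step, row in enumerate(grid):
        for c, (vel, length) in enumerate(row):
            if vel > threshold:  # anything below half a velocity unit counts as silence
                hits.append(Hit(
                    step=step,
                    note=COMPONENTS[c],
                    velocity=max(1, min(MAX_VELOCITY, round(vel * MAX_VELOCITY))),
                    length=max(1, min(STEPS_PER_BAR, round(length * STEPS_PER_BAR))),
                ))
    return hits


if __name__ == "__main__":
    # A basic rock beat: kick on beats 1 and 3, snare on beats 2 and 4.
    bar = [Hit(0, 36, 100, 2), Hit(4, 38, 90, 2), Hit(8, 36, 100, 2), Hit(12, 38, 90, 2)]
    grid = encode_bar(bar)
    print(len(grid), len(grid[0]), len(grid[0][0]))
    print(decode_bar(grid) == bar)
```

The design point is that every bar has the same shape (here 16 x 4 x 2 values), which is what a GAN generator and discriminator need, while note, velocity, and length all remain recoverable from the grid.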

Stage 2.

Once the student can play some simple melodies, the teacher moves on to the ideas of bars and chords, then zooms out to segments and finally to a full song.

Method:

Again, we train another GAN model, this time on real chord combinations. Meanwhile, we add the chord labels as a condition to the Stage 1 GAN (the melody generator). By combining these two GAN models, we hope to generate harmonically consistent melody segments that fit the chord combinations found in real music.

We then repeat this process while zooming out to larger segments such as the intro, verse, and chorus.
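A common way to add a condition to a GAN is to concatenate a label vector to the generator's noise input (and to feed the same label to the discriminator), as in conditional GANs. The sketch below assumes that approach, a hypothetical 24-chord vocabulary (12 major and 12 minor triads), and a hypothetical noise size of 100. It only builds the per-bar generator inputs for a chord progression and does not implement the generator itself; the hand-written progression stands in for the output of the chord GAN.

```python
import random

# Hypothetical chord vocabulary: 12 major and 12 minor triads.
PITCH_CLASSES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
CHORDS = PITCH_CLASSES + [p + "m" for p in PITCH_CLASSES]
NOISE_DIM = 100  # hypothetical size of the generator's random input z


def one_hot_chord(chord):
    """One-hot label vector y for a chord symbol such as 'Am'."""
    vec = [0.0] * len(CHORDS)
    vec[CHORDS.index(chord)] = 1.0
    return vec


def conditioned_generator_input(chord, rng):
    """Concatenate Gaussian noise z with the chord label y, giving [z, y].

    In a conditional GAN the discriminator would also receive y
    alongside the bar it judges (not shown here).
    """
    z = [rng.gauss(0.0, 1.0) for _ in range(NOISE_DIM)]
    return z + one_hot_chord(chord)


def segment_inputs(progression, seed=0):
    """One conditioned input per bar, so each generated bar is tied to its chord."""
    rng = random.Random(seed)
    return [conditioned_generator_input(chord, rng) for chord in progression]


if __name__ == "__main__":
    inputs = segment_inputs(["C", "Am", "F", "G"])
    print(len(inputs), len(inputs[0]))
```

Because every bar carries its own chord label, training can push the melody generator's output for each bar toward the chord of that bar, which is how the two GANs would be linked in this sketch.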

Stage 3.

Time for the challenge.

Method:

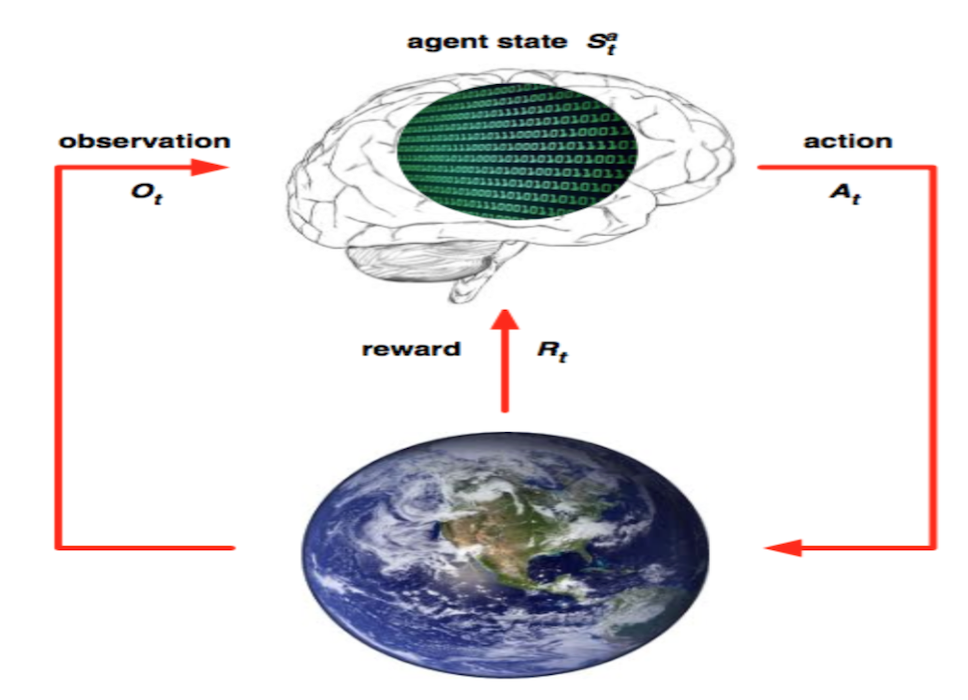

In the last stage, the model could be connected to other music information retrieval (MIR) models, such as chord or genre recognition, emotion recognition, or simply music assessment models. Here I would like to borrow concepts from reinforcement learning (RL) to explain how we could take advantage of these models:

Imagine that the main RL agent is our music generator and that an emotion recognizer is part of the RL environment. If the agent's task is to produce sad music, we could set the reward from the emotion recognizer's output. In addition, we could use the RL notion of an observation as the switch that decides which conditions are added to the generator.
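To make the RL framing concrete, here is a toy sketch under strong simplifying assumptions. The "generator" only chooses a mode and a tempo (a hypothetical action space), the emotion recognizer is a hand-written stand-in rather than a trained MIR model, and the policy is updated with REINFORCE, a basic policy-gradient method chosen here for brevity rather than anything MidiNet is confirmed to use. The target emotion stands in for the observation: it selects which recognizer output becomes the reward, and a separate policy is trained for each target.

```python
import math
import random

# Hypothetical action space: high-level choices a generator could be conditioned on.
ACTIONS = [("major", "fast"), ("major", "slow"), ("minor", "fast"), ("minor", "slow")]


def emotion_recognizer(mode, tempo):
    """Hand-written stand-in for a trained emotion recognizer (hypothetical).

    Returns class probabilities for 'sad' and 'happy'.
    """
    p_sad = 0.1 + 0.4 * (mode == "minor") + 0.4 * (tempo == "slow")
    return {"sad": p_sad, "happy": 1.0 - p_sad}


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train(target_emotion="sad", steps=2000, lr=0.2, seed=0):
    """REINFORCE on a softmax policy over ACTIONS.

    The reward is the recognizer's probability for target_emotion.
    Returns the final action probabilities.
    """
    rng = random.Random(seed)
    logits = [0.0] * len(ACTIONS)
    baseline = 0.0
    for _ in range(steps):
        probs = softmax(logits)
        a = rng.choices(range(len(ACTIONS)), weights=probs)[0]
        reward = emotion_recognizer(*ACTIONS[a])[target_emotion]
        advantage = reward - baseline
        # Gradient of log softmax(logits)[a] w.r.t. logit k is 1[k == a] - probs[k].
        for k in range(len(logits)):
            logits[k] += lr * advantage * ((1.0 if k == a else 0.0) - probs[k])
        baseline += 0.05 * (reward - baseline)  # running-average reward baseline
    return softmax(logits)


if __name__ == "__main__":
    probs = train("sad")
    best = max(range(len(ACTIONS)), key=probs.__getitem__)
    print(ACTIONS[best], round(probs[best], 3))
```

Swapping the stub for a real recognizer, and the four discrete choices for the generator's actual conditions, is where the real difficulty lies; the sketch only shows how a recognizer's output can serve as a reward signal.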

The Dream

As the title suggests, MidiNet is my dream of a general solution to music generation. If you want sad rock music, set the conditions and let MidiNet do the rest. If you need a composition assistant, enter what you've written and let MidiNet carry on the job. This dream is about an A.I. that brings the joy of music to a new level for every individual, whether musically trained or not.